Faceted search using Elasticsearch

Faceted search is a powerful feature that allows users to quickly refine their search results when browsing through the vast selection of items on Vinted. With almost 600 million unique items, the ability to narrow down search results is crucial for our users to find the perfect item quickly and efficiently. Faceted search also supports exploration, helping users discover items they might not otherwise have found. In this blog post, we’ll take a closer look at how we’ve implemented faceted search using Elasticsearch at Vinted.

Faceted search vs standard search filters

Faceted search differs from standard search filters in two ways: how the filter options are presented to the user, and how they are updated as the results change. With standard filters, the available options stay the same regardless of the current search results, so a user can click an option and end up with no results at all. Faceted search, on the other hand, updates the filter options dynamically based on the current search results and shows how many items each option would return if it were applied. Because only options that match at least one item are shown, the user never ends up with zero results.

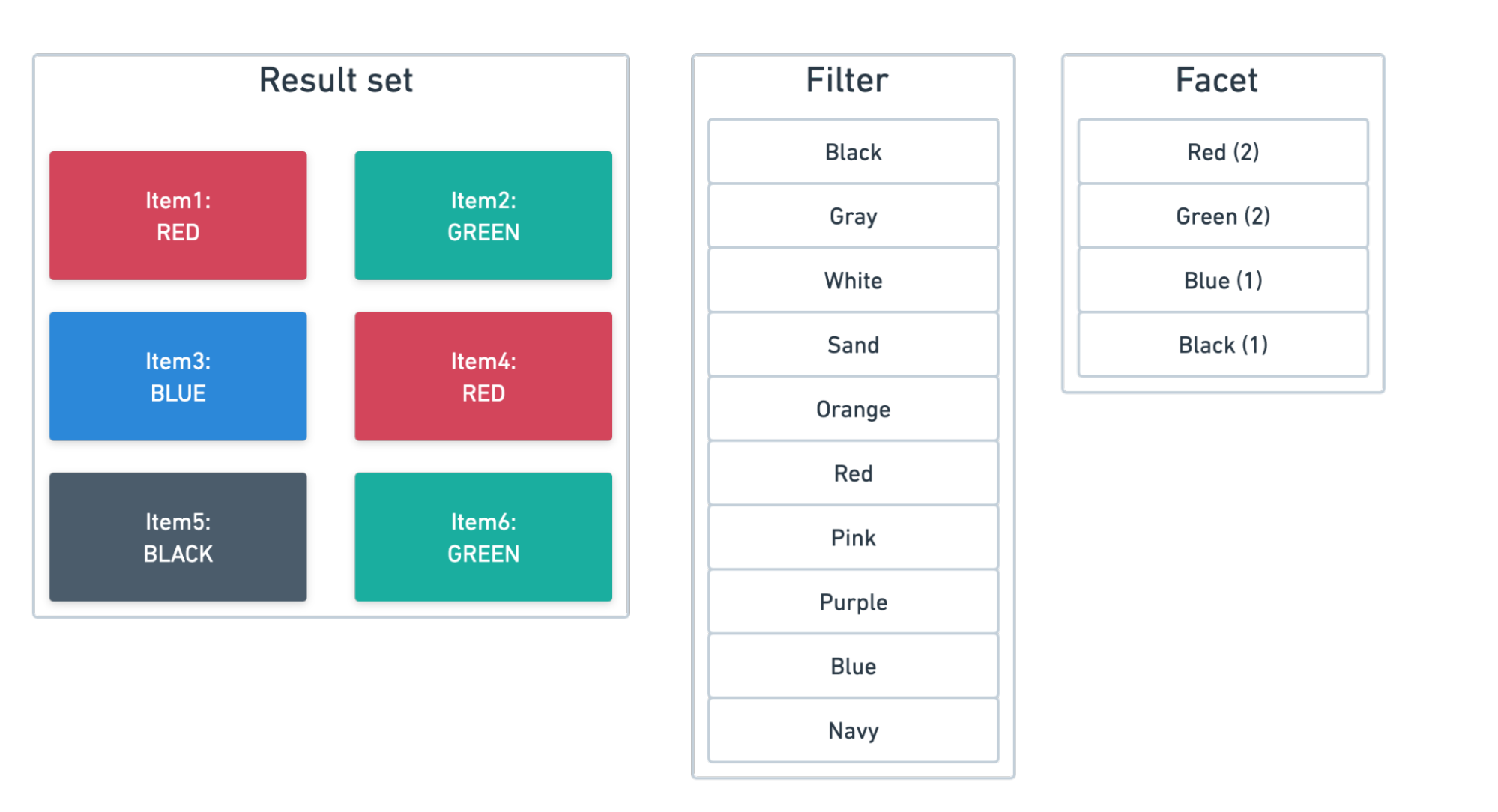

Let’s see an example:

- Search results: 6 items of various colours.

- Filter: shows all colours. If, for example, ‘Orange’ were clicked, there would be zero results, as there are no orange items in the search results.

- Facets: show only the colours available in the search results, so no click can lead to zero results. Each option also shows the number of items there would be if that filter were applied.
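To make the difference concrete, here is a minimal Python sketch with made-up data (the colour names and the six-item result list are hypothetical): a standard filter lists every colour, while a facet lists only the colours present in the results, together with their counts.

```python
from collections import Counter

# Hypothetical data: every colour a standard filter would list,
# and the colours of the six items in the current search results.
ALL_COLOURS = ["Black", "White", "Grey", "Orange", "Blue", "Red"]
results = ["Black", "Black", "White", "Blue", "Blue", "Blue"]


def standard_filter_options(all_options):
    """A standard filter shows every option, whether or not it matches anything."""
    return list(all_options)


def facet_options(result_values):
    """A facet shows only values present in the results, with their counts."""
    return dict(Counter(result_values))


print(standard_filter_options(ALL_COLOURS))  # includes 'Orange', which would give 0 items
print(facet_options(results))  # {'Black': 2, 'White': 1, 'Blue': 3}
```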

Implementing faceted search at Vinted

Requirements:

- Facets respond with a 99.9% success rate

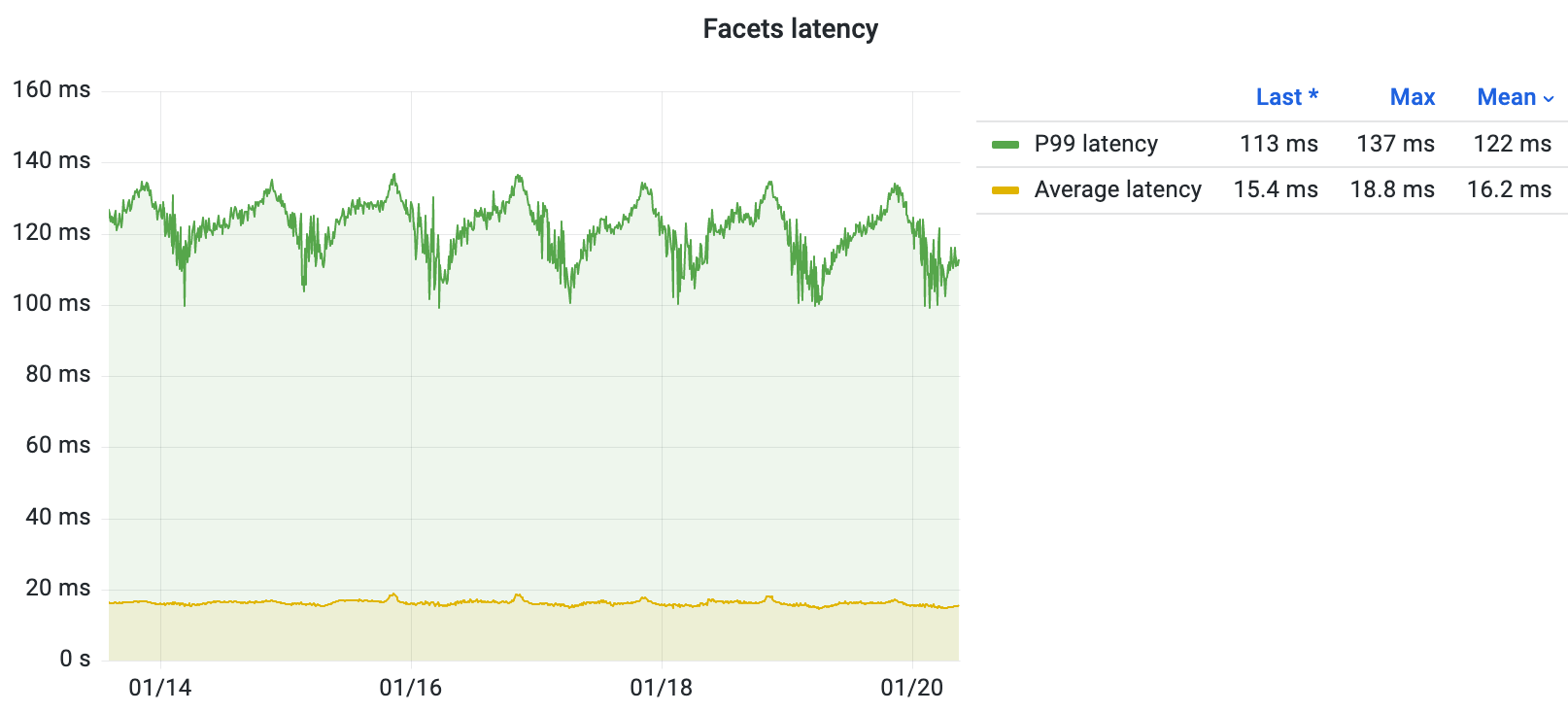

- P99 latency to get all facets is less than 150ms

- Facets for colours, sizes, and brands

- The ability to calculate facet counts for up to 500 items, with any count above 500 represented as ‘500+’

- The ability to handle more than 2,000 search requests per second, each of which requires the calculation of facets
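The ‘500+’ display rule from the requirements can be expressed as a small helper. This is a sketch; the function and constant names are illustrative, not taken from Vinted’s code.

```python
FACET_COUNT_CAP = 500  # counts above this value are displayed as "500+"


def display_count(count: int, cap: int = FACET_COUNT_CAP) -> str:
    """Format a facet count for display, capping it at `cap`."""
    if count > cap:
        return f"{cap}+"
    return str(count)


print(display_count(42))         # 42
print(display_count(2_000_000))  # 500+
```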

Deciding between combining query and aggregations in a single Elasticsearch request or sending them separately

Terms aggregation is an Elasticsearch feature that groups the documents matching a query by the values of a field and counts the number of documents in each group (bucket). This is exactly what faceted search needs, as it enables the calculation and display of the number of occurrences of each value of a specific field.
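As an illustration, the sketch below builds a search body containing one terms aggregation and turns the aggregation’s buckets into a value-to-count mapping. The `colours` aggregation name and the `colour` field are hypothetical; the `aggregations` → `buckets` → `key`/`doc_count` structure is the standard terms aggregation output, shown here in abbreviated form.

```python
def terms_facet_request(facet_name: str, field: str) -> dict:
    """Search body with a single terms aggregation on `field`."""
    return {"aggs": {facet_name: {"terms": {"field": field}}}}


def parse_buckets(response: dict, facet_name: str) -> dict:
    """Map each bucket key of a terms aggregation to its document count."""
    buckets = response["aggregations"][facet_name]["buckets"]
    return {bucket["key"]: bucket["doc_count"] for bucket in buckets}


# Abbreviated terms aggregation response (buckets sorted by doc_count, descending).
sample_response = {
    "aggregations": {
        "colours": {
            "doc_count_error_upper_bound": 0,
            "sum_other_doc_count": 0,
            "buckets": [
                {"key": "blue", "doc_count": 3},
                {"key": "black", "doc_count": 2},
            ],
        }
    }
}

print(parse_buckets(sample_response, "colours"))  # {'blue': 3, 'black': 2}
```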

When using Elasticsearch for faceted search, one decision to make is whether to include the query and aggregations in the same request or to send them separately.

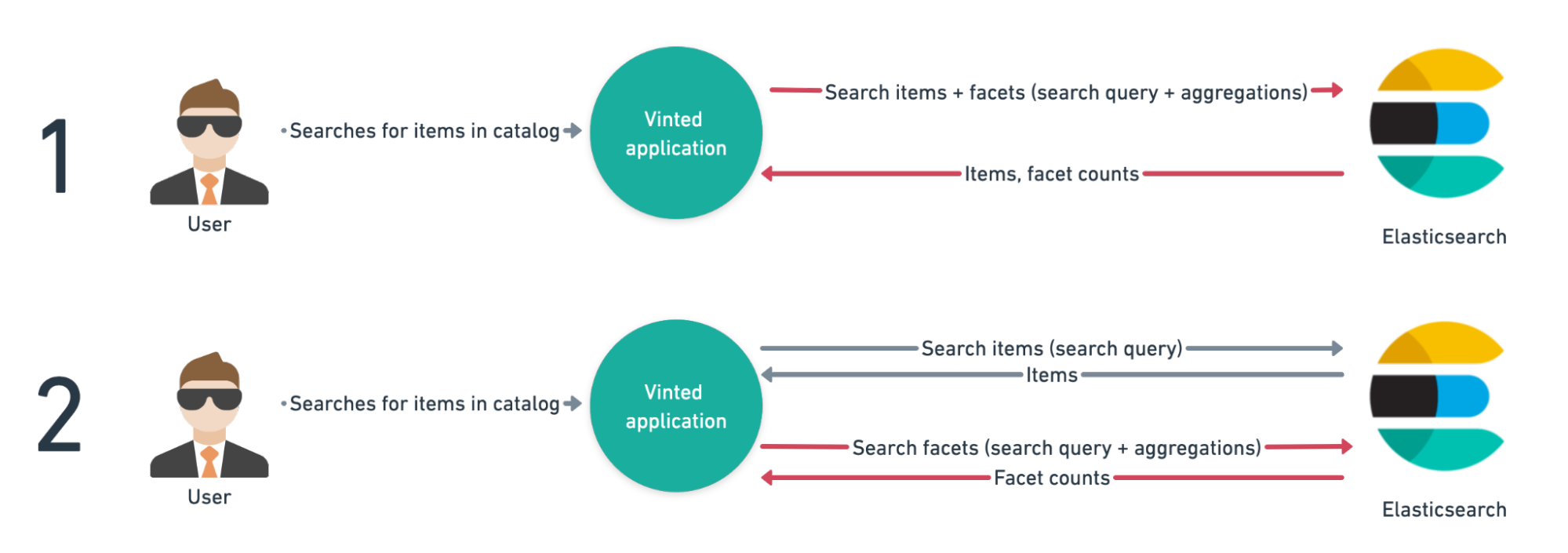

We have two options for sending aggregation requests to Elasticsearch:

- Combining item search and aggregations in the same Elasticsearch request

- Sending the aggregation request concurrently with the item search request

Option 1: Combining item search and aggregations in the same Elasticsearch request

- Pros: No increase in the number of Elasticsearch requests

- Cons: The item search request will become slower as it also has to calculate the aggregations

Option 2: Sending aggregations in separate Elasticsearch requests

- Pros: Decoupled item search and aggregation requests, the ability to modify the query for the aggregation request, and the ability to send facet requests to separate indices/clusters.

- Cons: The number of Elasticsearch requests will increase, causing a higher load on Elasticsearch.

Through offline testing, we found that Option 2 resulted in 20% higher CPU usage on Elasticsearch, but the item search was ~71ms faster. After weighing the pros and cons, we decided to move forward with Option 2.
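A minimal sketch of Option 2 using `asyncio`: the item search and the facet request share the same query but are sent as separate requests, concurrently. `fake_search` is a hypothetical stand-in for an async Elasticsearch client call, and the field names are assumptions.

```python
import asyncio


async def fake_search(body: dict) -> dict:
    """Hypothetical stand-in for an async Elasticsearch client call."""
    await asyncio.sleep(0.01)  # simulate network latency
    return {"request": body}


async def search_with_facets(query: dict, facet_aggs: dict) -> tuple:
    """Option 2: run the item search and the facet request concurrently."""
    item_body = {"query": query}  # the item search carries no aggregations
    facet_body = {"query": query, "aggs": facet_aggs}  # facets get their own request
    # gather() runs both calls concurrently; total wait is roughly the slower one.
    items, facets = await asyncio.gather(fake_search(item_body), fake_search(facet_body))
    return items, facets


items, facets = asyncio.run(
    search_with_facets(
        {"match": {"title": "gaming console"}},
        {"colours": {"terms": {"field": "colour_id"}}},
    )
)
```

Keeping the aggregations out of the item search body is what keeps the item search fast; the cost is the extra request Elasticsearch has to serve.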

Optimising Facet Requests

Now that we’ve decided to send aggregations in separate requests, let’s look at some ways we can optimise and speed up the facet requests.

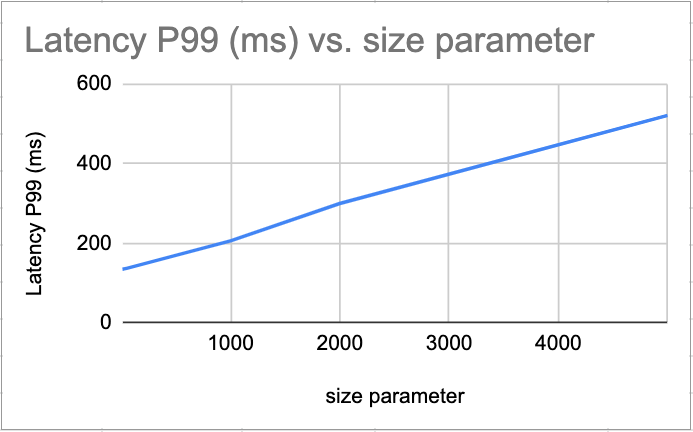

1. Setting the size parameter to 0

The size parameter defines the number of search hits to return. Since we’re only interested in the aggregations, we set size to 0 so that Elasticsearch doesn’t return any hits.

Note that setting size to 0 only affects the search hits. Aggregations are still calculated and returned, so we still get the facet counts.
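A facet-only request body might look like this (a sketch; the query and the `colour_id` field are hypothetical):

```python
def facet_request_body(query: dict, aggs: dict) -> dict:
    """Facet-only search body: no hits are returned, only aggregations."""
    return {
        "size": 0,  # skip returning hits; aggregations are still computed
        "query": query,
        "aggs": aggs,
    }


body = facet_request_body(
    {"match": {"title": "gaming console"}},
    {"colours": {"terms": {"field": "colour_id"}}},
)
```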

2. Setting the terminate_after parameter

The terminate_after parameter in Elasticsearch limits the number of documents collected per shard and allows early termination of a query when the limit is reached.

For Vinted, this is especially useful for faceted search, as we only display counts of up to 500 for each facet value. There’s no need to count millions of items when anything above 500 is shown as ‘500+’.

To optimise performance, we set the terminate_after parameter to 100,000.

This ensures that the facet counts are calculated for a sufficient number of items, while also reducing latency and CPU usage by limiting the size of the result set for broad queries.

This setting has proved crucial in improving the performance of faceted search at Vinted.
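Extending the facet-only body with `terminate_after` could look like the sketch below. The 100,000 value comes from the post; the helper names are hypothetical. When collection stops early, Elasticsearch reports `terminated_early: true` in the response.

```python
TERMINATE_AFTER = 100_000  # per-shard document limit used in the post


def bounded_facet_request_body(
    query: dict, aggs: dict, terminate_after: int = TERMINATE_AFTER
) -> dict:
    """Facet-only search body that stops collecting documents early on each shard."""
    return {
        "size": 0,
        "terminate_after": terminate_after,  # max documents collected per shard
        "query": query,
        "aggs": aggs,
    }


def terminated_early(response: dict) -> bool:
    """True if the response reports that document collection stopped early."""
    return bool(response.get("terminated_early", False))
```

When a response is terminated early, its counts are computed over a subset of the matching documents, so they are lower bounds on the true counts.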

3. Reducing cardinality

Reducing cardinality refers to reducing the number of unique values in a particular field. High cardinality fields have a large number of unique values, which can cause latency issues.

Through testing, we found that higher cardinality correlates with higher latency. For example, the brand field had a cardinality of 2,000,000, which was causing latency issues. To solve this, we decided to calculate facets only for the brands verified by our brand specialist team. Reducing the brand field’s cardinality from 2,000,000 to 40,000 decreased P99 latency by ~15ms.
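The post doesn’t describe how the brand field was restricted. One possible approach, sketched below under that assumption, is to index a separate, hypothetical `verified_brand_id` field that is only populated for verified brands, and to run the brand terms aggregation on it; documents without the field don’t fall into any bucket. The brand IDs are made up.

```python
# Hypothetical IDs of brands verified by the brand specialist team.
VERIFIED_BRAND_IDS = {14, 53, 88}


def facet_fields_for_item(item: dict, verified_brand_ids=VERIFIED_BRAND_IDS) -> dict:
    """Brand-related fields of an item document, built at index time."""
    doc = {"brand_id": item["brand_id"]}
    # Only verified brands populate the field used for the brand facet,
    # which caps its cardinality at the number of verified brands.
    if item["brand_id"] in verified_brand_ids:
        doc["verified_brand_id"] = item["brand_id"]
    return doc


# The brand facet then aggregates on the low-cardinality field.
brand_facet_aggs = {"brands": {"terms": {"field": "verified_brand_id"}}}
```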

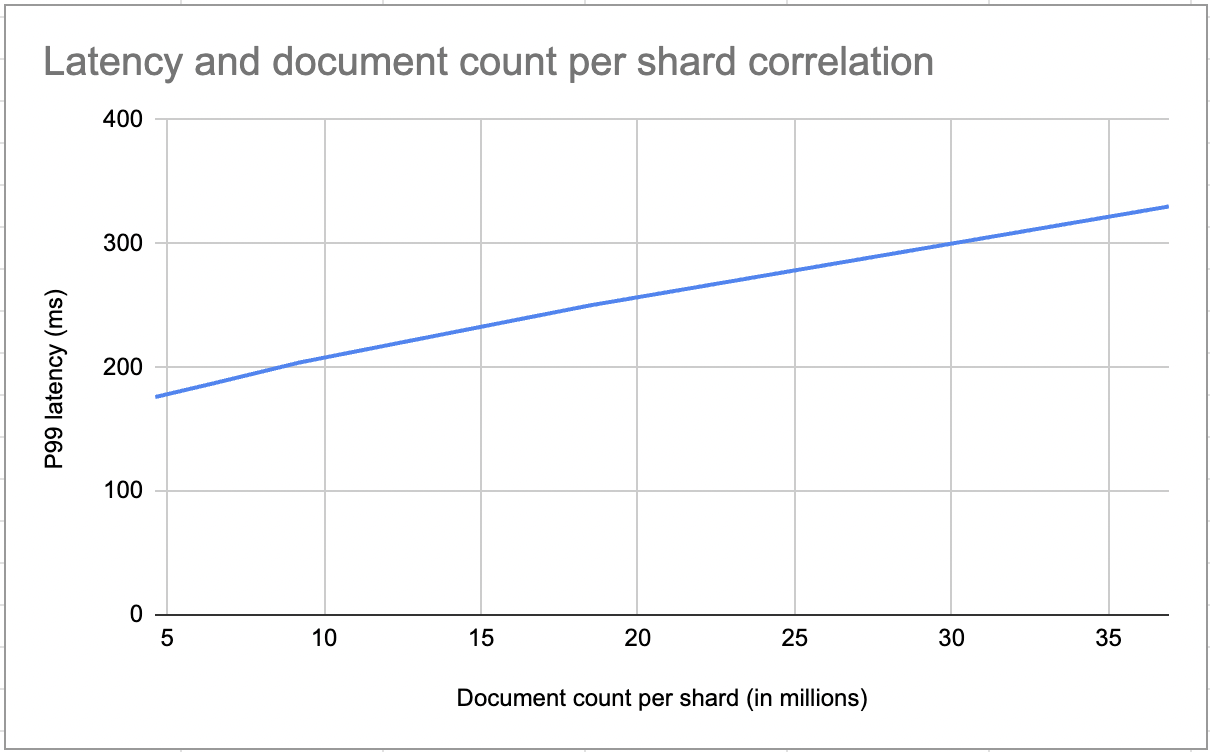

4. Trying out different shard sizes

Experimenting with different index shard sizes can be useful for improving latency. In our testing, smaller shards resulted in better latency.

However, getting smaller shards for facets would have meant creating separate indices for them, which would have doubled disk space usage and added complexity to re-indexing and maintenance, so we decided against it.

By not creating new indices for facets, we didn’t gain the 11ms improvement in latency that we could’ve had, but we made the trade-off to avoid additional costs and complexities.

5. Reducing the number of buckets returned

The size parameter of Elasticsearch’s terms aggregation determines how many of the top facet values (buckets) are returned.

In our experiments, we found that setting a small size value for the brand facet greatly improves performance.

We decided to limit the number of brands returned to the top 50 for a given query.
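In request terms, this is the `size` of the brand terms aggregation, as in the sketch below (the `brand_id` field name is an assumption). The default `size` of a terms aggregation is 10, so returning the top 50 brands requires setting it explicitly.

```python
TOP_BRANDS = 50  # number of brand buckets to return, as in the post


def brand_facet_aggs(field: str = "brand_id", top_n: int = TOP_BRANDS) -> dict:
    """Terms aggregation that returns only the top `top_n` brands by item count."""
    # Buckets are ordered by document count (descending) by default,
    # so `size` keeps the most popular brands for the current query.
    return {"brands": {"terms": {"field": field, "size": top_n}}}
```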

Caching

To improve performance, we use caching for faceted search requests in Elasticsearch by storing the facet responses in Memcached. This reduces the number of requests made to Elasticsearch, freeing resources, and making requests faster. When multiple users make the same search request, only one request is made to Elasticsearch.
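A minimal cache-aside sketch of this flow. `InMemoryCache` is a hypothetical stand-in for a Memcached client (only `get` and `set` with a TTL), `search` stands in for the Elasticsearch call, and hashing the canonical JSON of the request body into a key is an assumption, not necessarily how Vinted builds its keys.

```python
import hashlib
import json
import time


class InMemoryCache:
    """Hypothetical stand-in for Memcached: get/set with a TTL in seconds."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:  # expired entries behave like misses
            del self._store[key]
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._store[key] = (value, time.monotonic() + ttl_seconds)


def cache_key(request_body: dict) -> str:
    """Identical facet requests map to the same key (sorted-key JSON, hashed)."""
    canonical = json.dumps(request_body, sort_keys=True, separators=(",", ":"))
    return "facets:" + hashlib.sha256(canonical.encode()).hexdigest()


def get_facets(request_body: dict, cache, search, ttl_seconds: int):
    """Serve facets from the cache, or query Elasticsearch and store the result."""
    key = cache_key(request_body)
    cached = cache.get(key)
    if cached is not None:
        return cached
    facets = search(request_body)
    if ttl_seconds > 0:  # a duration of 0 means "don't cache"
        cache.set(key, facets, ttl_seconds)
    return facets
```

Treating 0 as "skip the write" matters with real Memcached, where an expiration time of 0 means the entry never expires.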

However, this optimisation comes at the cost of consistency. Inventory on Vinted’s C2C marketplace is “one of everything”, so every item upload and sale can change the facet counts, and recalculating facets after every such change would be expensive in terms of Elasticsearch infrastructure. As a result, cached responses may not always be up to date.

Choosing the cache duration is a balancing act: a long duration could lead to users seeing outdated inventory counts, while a short one would not provide enough benefit.

To determine the cache duration, we take the number of items in the result set into account. If the number of items is high, the difference in the facet count will not be noticeable to users because we display ‘500+’ for counts higher than 500. However, if the number of items in the result set is low, the difference in the facet count will be more noticeable to users, and therefore the cache duration should be shorter, to ensure that the facet counts are accurate and up-to-date.

Size and colour facet cache duration

| Item count | Cache duration |
|---|---|
| > 50,000 | 1 day |
| > 10,000 | 12 hours |
| > 1,000 | 1 hour |
| > 500 | 30 minutes |
| <= 500 | 0 |
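The table maps directly onto a threshold lookup. This sketch assumes `item_count` is the number of items in the result set; the function and constant names are illustrative.

```python
from datetime import timedelta

# (exclusive lower bound on item count, cache duration), from largest to smallest.
SIZE_COLOUR_TTLS = [
    (50_000, timedelta(days=1)),
    (10_000, timedelta(hours=12)),
    (1_000, timedelta(hours=1)),
    (500, timedelta(minutes=30)),
]


def size_colour_cache_ttl(item_count: int) -> timedelta:
    """Cache duration for size and colour facets, based on the result set size."""
    for threshold, ttl in SIZE_COLOUR_TTLS:
        if item_count > threshold:
            return ttl
    return timedelta(0)  # 500 items or fewer: don't cache
```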

Brand facet cache

We determine the cache duration for the brand facet from the brand with the lowest item count among the top 50 brands shown. This approach assumes that the least popular brand is the most likely to sell out, so a cache duration based on it should be safe. Additionally, if the query returns fewer than 50 brands, we don’t cache the facets at all, since a new item with a new brand could be added and would not be shown.

    facets = {
        "Nike": 2000,
        "New Balance": 1000,
        …,
        "Adidas": 20  # ← lowest facet value
    }

| Lowest facet value | Cache duration |
|---|---|
| > 1,000 | 1 day |
| > 200 | 3 hours |
| > 50 | 30 minutes |
| <= 50 | 0 |
| Result set contains < 50 brands | 0 |
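Similarly, a sketch of the brand rule, assuming `brand_counts` maps the top brands returned by the brand facet request (at most 50) to their item counts. The names are illustrative.

```python
from datetime import timedelta

TOP_BRANDS = 50
# (exclusive lower bound on the lowest brand count, cache duration).
BRAND_TTLS = [
    (1_000, timedelta(days=1)),
    (200, timedelta(hours=3)),
    (50, timedelta(minutes=30)),
]


def brand_cache_ttl(brand_counts: dict) -> timedelta:
    """Cache duration for the brand facet, driven by its least popular brand."""
    if len(brand_counts) < TOP_BRANDS:
        return timedelta(0)  # a new brand could appear, so don't cache
    lowest = min(brand_counts.values())
    for threshold, ttl in BRAND_TTLS:
        if lowest > threshold:
            return ttl
    return timedelta(0)  # least popular brand has 50 items or fewer
```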

Simplifying queries to better reuse the cache

We noticed that some parts of the Elasticsearch search request are not essential for calculating facet counts, so we removed them from facet requests. One of these was the user ban filter. For example:

- User 1: has banned another user

- User 2: hasn’t banned anyone

If both search for ‘gaming console’, their queries will differ, because User 1’s query includes the banned user filter while User 2’s does not. As a result, the two requests can’t reuse the same cached response.

Although this may confuse users, since the counts can include items from users they have banned, we determined that the 5% increase in cache hit ratio was worth the trade-off.
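One way to get this effect, sketched here under assumptions, is to build the facet cache key only from the parameters that affect facet counts. The parameter names (`query`, `banned_user_ids`) are hypothetical; the post only says the user ban filter was dropped.

```python
import hashlib
import json

# Hypothetical search parameters that don't affect facet counts enough to matter.
FACET_IRRELEVANT_PARAMS = {"banned_user_ids"}


def facet_cache_key(search_params: dict) -> str:
    """Cache key built only from parameters that matter for facet counts."""
    relevant = {k: v for k, v in search_params.items() if k not in FACET_IRRELEVANT_PARAMS}
    canonical = json.dumps(relevant, sort_keys=True, separators=(",", ":"))
    return "facets:" + hashlib.sha256(canonical.encode()).hexdigest()


user_1 = {"query": "gaming console", "banned_user_ids": [42]}  # has banned someone
user_2 = {"query": "gaming console"}  # hasn't banned anyone
print(facet_cache_key(user_1) == facet_cache_key(user_2))  # True
```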

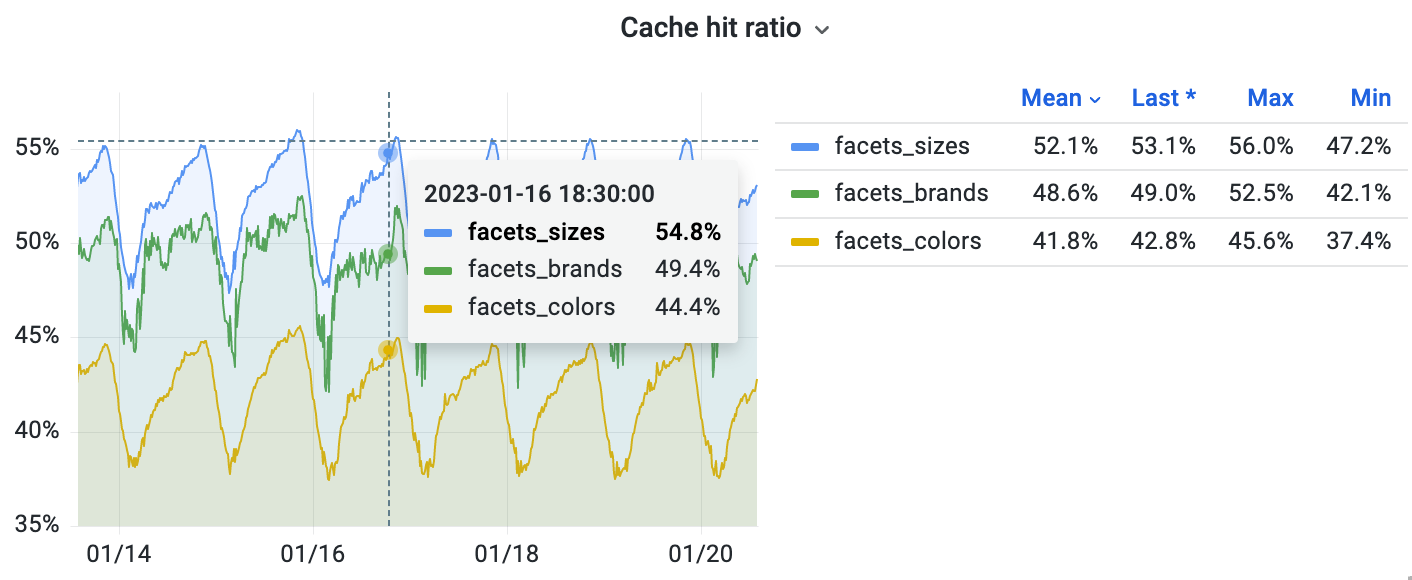

Caching results

We were able to achieve a cache hit ratio of approximately 50%, which means that half of the facet requests are served from the cache and don’t require a call to Elasticsearch.

Handling Multi-Select Facets

Multi-select facets present a challenge in faceted search because they must show counts for options that are not part of the current result set. For example, even when a user filters for black items, the counts for other colours such as grey and white must still be displayed. This means that the counts for multi-select facets cannot be determined solely from the current result set.

For each facet with an applied filter, we have to query with all the filters except the one on that facet itself.

In other words, we ignore the filter applied to the current multi-select facet but still take any other applied filters into account.

Implementation

Instead of making a single request to Elasticsearch to retrieve all facets, we make separate Elasticsearch requests for each facet and exclude the filter applied to that particular facet in each request.

For example, when requesting the colour facet, we exclude the applied colour filter to show all possible colours.
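A sketch of the per-facet requests. `FACET_FIELDS` and the field names are hypothetical, and the query structure (a `bool` query with the base query in `must` and the selected facet filters in `filter`) is one reasonable way to express it, not necessarily the one Vinted uses. Each request drops only the filter of the facet it computes.

```python
# Hypothetical mapping from facet name to the indexed field it filters on.
FACET_FIELDS = {"colour": "colour_id", "size": "size_id", "brand": "brand_id"}


def filter_clauses(selected: dict, exclude=None) -> list:
    """Terms filters for every selected facet, optionally skipping one facet."""
    return [
        {"terms": {FACET_FIELDS[name]: values}}
        for name, values in selected.items()
        if values and name != exclude
    ]


def facet_requests(base_query: dict, selected: dict) -> dict:
    """One facet-only request per facet, each ignoring that facet's own filter."""
    requests = {}
    for name, field in FACET_FIELDS.items():
        requests[name] = {
            "size": 0,
            "query": {
                "bool": {
                    "must": base_query,
                    # every selected filter except this facet's own
                    "filter": filter_clauses(selected, exclude=name),
                }
            },
            "aggs": {name: {"terms": {"field": field}}},
        }
    return requests


selected = {"colour": [1], "size": [7]}  # made-up IDs for the selected options
reqs = facet_requests({"match": {"title": "dress"}}, selected)
```

A facet with no selection (brand here) keeps every applied filter, so its counts match the current result set.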

Results

In conclusion, we’ve successfully implemented faceted search on our platform for the size, colour, and brand filters, allowing users to easily refine their search results based on these criteria. We’re pleased to report that the feature has been well received by our users and that we were able to achieve ~120ms P99 latency and ~16ms average latency. We’re also excited to announce that we’ll be extending this feature to include more filters in the future as we continue to provide a seamless search experience for our users. Congratulations on making it to the end of this post!