Vinted Vitess Voyage: Chapter 1 - Autumn is coming

“Winter is coming” - Ned Stark, Game of Thrones

Au contraire, for us autumn is the season that warns of upcoming challenges: the most extreme growth and workload period of the whole year. In fact, we coined a term for it: ‘Vinted Autumn’. Our main MySQL databases were taking a massive beating every autumn despite being sharded vertically multiple times. While managing a dozen physical servers is an OK task, managing 42 servers manually is quite cumbersome. Additionally, the process of vertical sharding itself was increasingly hard to orchestrate, and there was no way of turning back. Hacking together our own horizontal sharding solution was not an option either. Naturally, we wanted to bring in tools to manage MySQL efficiently.

Vitess became the eighth CNCF project to graduate in November 2019. It promised all the bells and whistles to ease painful vertical sharding, plus the possibility to shard horizontally. After all, Vitess was created to solve the MySQL scalability challenges that the team at YouTube faced. So, 2019 marked the start of our Vitess Voyage, and this is the first in a series of chapters sharing the story.

The Beginning

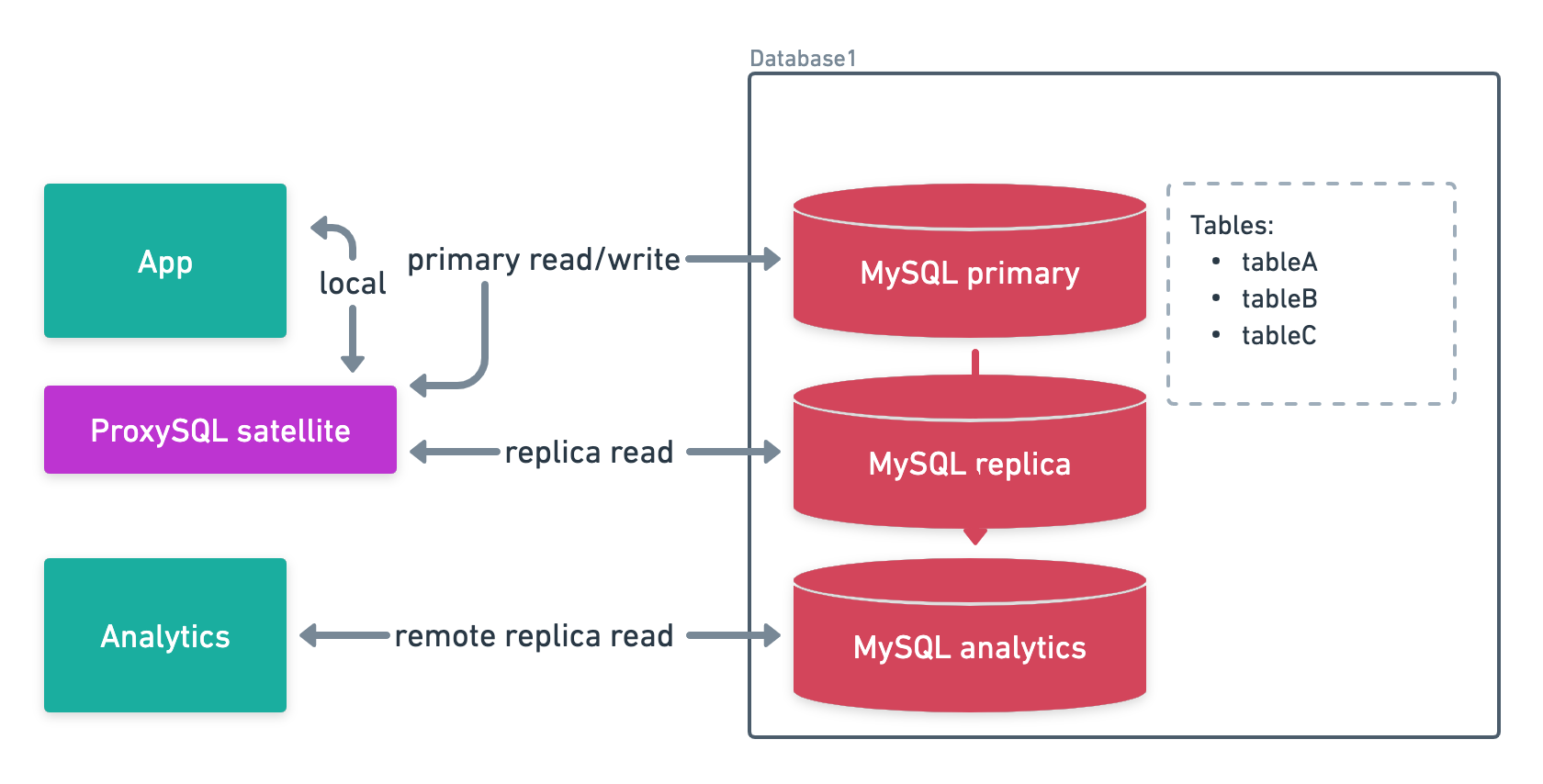

Different countries had their own Vinted portal with a separate deployment and a separate set of resources. We had already been using functional sharding in our monolithic Rails application for some of our largest portals for years. We used a relatively complex ProxySQL cluster setup of core and satellite (aka replica) nodes, with a couple of routing rules to send queries to the appropriate primary or replicas of the target functional shard. All satellites ran on application servers to minimise network hops. Our analytics applications used dedicated functional shard replicas (Fig. 1).
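The routing described above can be modelled roughly in Python. Each functional shard has a primary and a replica hostgroup, and the first regex that matches decides the target. The shard names, hostgroup ids and regexes are hypothetical and not taken from Vinted's configuration. ProxySQL itself keeps such rules in its `mysql_query_rules` table (for example `match_pattern` and `destination_hostgroup`), not in application code.

```python
import re

# Hypothetical hostgroup ids: each functional shard has a primary and a replica group.
HOSTGROUPS = {
    "db1": {"primary": 10, "replica": 11},
    "db2": {"primary": 20, "replica": 21},
}

# Read/write split in the spirit of ProxySQL query rules: the first matching
# regex decides; anything unmatched (writes, DDL, ...) falls through to the primary.
READ_RULES = [
    # Locking reads must see the primary.
    (re.compile(r"^\s*SELECT\b.*\bFOR\s+UPDATE\b", re.IGNORECASE | re.DOTALL), "primary"),
    # Other reads may be served by replicas.
    (re.compile(r"^\s*SELECT\b", re.IGNORECASE), "replica"),
]


def route(functional_shard: str, sql: str) -> int:
    """Return the hostgroup id for a query sent on a functional shard's connection."""
    groups = HOSTGROUPS[functional_shard]
    for pattern, role in READ_RULES:
        if pattern.search(sql):
            return groups[role]
    return groups["primary"]
```

For example, `route("db1", "SELECT * FROM users WHERE id = 1")` picks the `db1` replica group, while an `UPDATE` on `db2` goes to the `db2` primary.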

Vertical sharding is a process where some tables from a single functional shard are moved to another functional shard.

Horizontal sharding is a process where the rows of some tables are spread over multiple shards within the same functional shard.

A functional shard is a group of tables that are closely related and expected to reside in a single database (and potentially share the same connection objects), while tables from different functional shards may be located in different databases.

Figure 1: ProxySQL functional shard
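The definitions above can be illustrated with a small Python sketch. In the hypothetical layouts, moving `tableB` from `db1` to `db2` is a vertical split. `crosses_boundary` flags queries whose tables would span functional shards. `horizontal_shard` spreads rows of one table over several shards. The hash-modulo scheme is a simplification chosen for illustration: Vitess instead maps rows to keyspace IDs through vindexes, and each shard owns a range of keyspace IDs.

```python
import hashlib

# Hypothetical layouts: which functional shard (database) each table lives in.
BEFORE = {"tableA": "db1", "tableB": "db1"}
AFTER = {"tableA": "db1", "tableB": "db2"}  # tableB moved: a vertical split


def crosses_boundary(tables: set[str], layout: dict[str, str]) -> bool:
    """True if a query touching these tables would span more than one functional shard."""
    return len({layout[t] for t in tables}) > 1


def horizontal_shard(key: int, shard_count: int) -> int:
    """Pick one of shard_count shards for a row by hashing its sharding key.

    Plain hash-modulo, for illustration only (not how Vitess assigns shards).
    """
    digest = hashlib.sha256(str(key).encode()).digest()
    return int.from_bytes(digest[:8], "big") % shard_count
```

A join between `tableA` and `tableB` is fine with the `BEFORE` layout but crosses a boundary with `AFTER`. Such queries had to be removed or refactored before a split.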

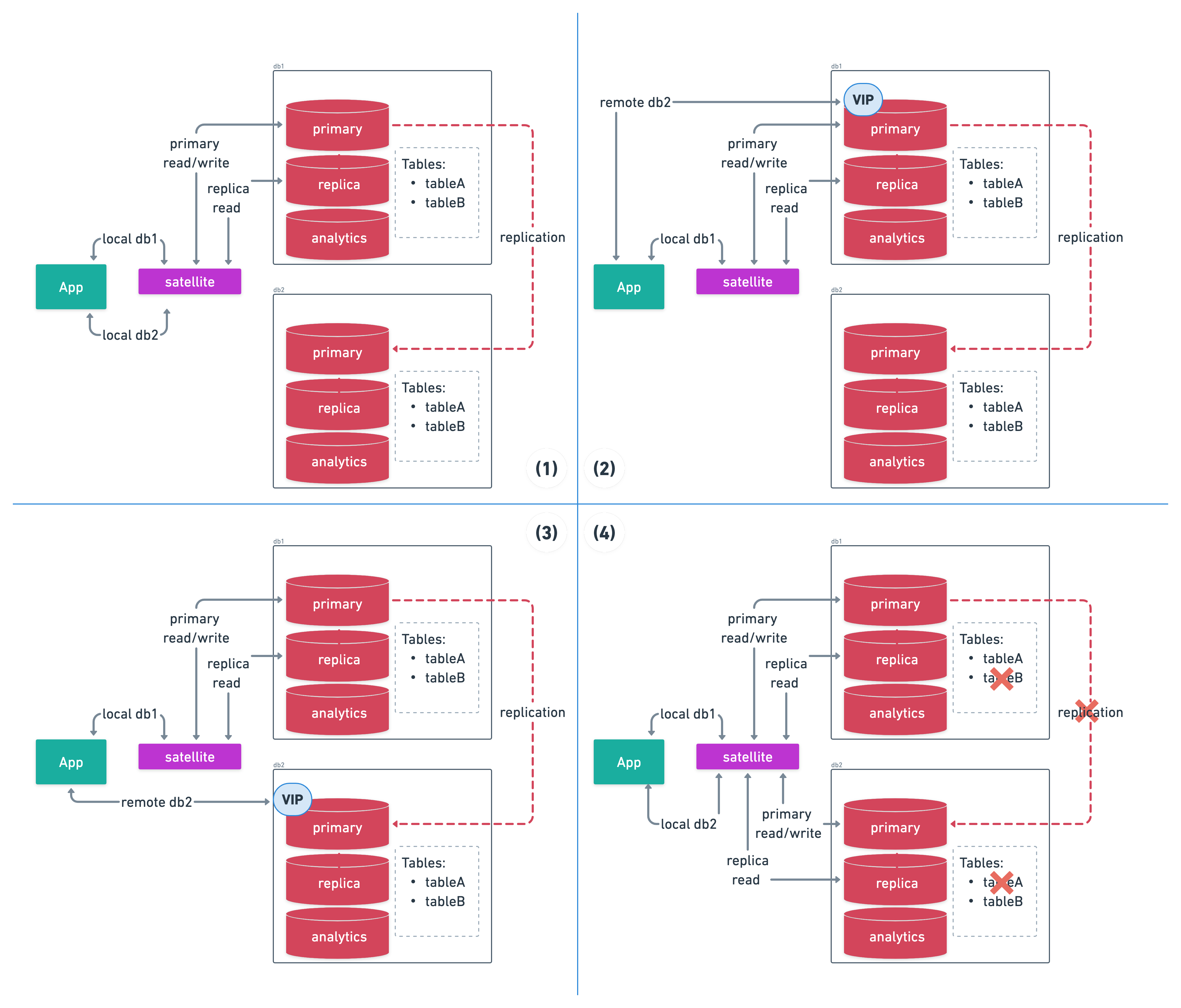

The vertical sharding process was quite laborious and prone to errors (Fig. 2). The following steps summarise the overall process:

- Step 1: Preparation
  - Monitor and fix queries that cross functional shard boundaries
    - Remove joins
    - Refactor transactions
  - Create new MySQL `db2` cluster with primary replicating from `db1` cluster
  - Configure application with new functional shard `db2` which reuses `db1` connection

- Step 2: Separate connections
  - Assign VIP (IP alias) to `db1` and switch functional shard `db2` to use it

- Step 3: Final switch
  - Perform IP alias switcheroo from `db1` primary to `db2` primary

- Step 4: Cleanup
  - Stop replication from `db1` to `db2`
  - Delayed drop of `tableB` on `db1`
  - Delayed drop of `tableA` on `db2`
  - Configure new ProxySQL functional shard and switch applications to use it instead of VIP

Figure 2: ProxySQL vertical sharding
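Out-of-order commands were one of the risks of this manual process. A hypothetical runbook guard, sketched in Python, can refuse to run stages out of sequence and report whether a rollback without downtime is still possible. The class and its methods are illustrative and not tooling we actually used. The actual commands of each stage are left out.

```python
from enum import IntEnum


class Step(IntEnum):
    """The four stages of the ProxySQL-era vertical split, in order."""
    PREPARATION = 1
    SEPARATE_CONNECTIONS = 2
    FINAL_SWITCH = 3
    CLEANUP = 4


# Once the IP alias moves to the db2 primary, rolling back needs downtime.
POINT_OF_NO_RETURN = Step.FINAL_SWITCH


class VerticalSplitRunbook:
    """Hypothetical guard that refuses to run stages out of order."""

    def __init__(self) -> None:
        self.completed: list[Step] = []

    def run(self, step: Step) -> None:
        if len(self.completed) == len(Step):
            raise RuntimeError("runbook already finished")
        expected = Step(len(self.completed) + 1)
        if step is not expected:
            raise RuntimeError(f"expected {expected.name}, got {step.name}")
        # A real runbook would execute and verify the stage's commands here.
        self.completed.append(step)

    def can_roll_back(self) -> bool:
        """Rolling back without downtime is only possible before the final switch."""
        return all(s < POINT_OF_NO_RETURN for s in self.completed)
```

The guard catches mistakes like jumping to `CLEANUP` straight after `SEPARATE_CONNECTIONS`. It cannot catch a misspelled or malformed command inside a stage.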

Despite the relative simplicity of the approach, it had several drawbacks:

- Human error: misspelled, malformed or out-of-order commands could lead to downtime;

- Cluster `db1` had to be able to hold at least double the number of connections due to the VIP;

- Both primary and replica reads were forwarded to the VIP, putting even more pressure on `db1`;

- Connections to the VIP were established much more slowly than through ProxySQL;

- After Step 3 there was no turning back without downtime.
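The connection pressure on `db1` during Step 2 can be shown with a back-of-the-envelope model. Before Step 2, shard `db2` rides on the `db1` connection, so its traffic is already counted in `db1_conns`. After Step 2, `db2` opens its own connections to the VIP, which still resolves to the `db1` primary. The function names and numbers are hypothetical. `max_connections` is the standard MySQL server setting.

```python
def db1_primary_connections(db1_conns: int, db2_conns: int, db2_on_vip: bool) -> int:
    """Rough count of connections the db1 primary must accept (hypothetical model).

    db1_conns: connections the db1 functional shard opens (including db2 traffic
               while db2 still reuses the db1 connection).
    db2_conns: connections db2 opens once it gets its own connection to the VIP.
    """
    if not db2_on_vip:
        return db1_conns
    # The VIP still points at the db1 primary, and both primary and replica
    # reads for db2 go through it, so every db2 connection lands there.
    return db1_conns + db2_conns


def has_headroom(max_connections: int, needed: int) -> bool:
    """Check the estimate against the primary's max_connections setting."""
    return needed <= max_connections
```

With a hypothetical 2,000 connections per shard, the estimate jumps from 2,000 to 4,000 once `db2` moves to the VIP. A primary sized for 3,000 would run out of connections.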

Eventually, we reached the point where vertical sharding was only possible by turning off asynchronous jobs and reducing the number of running application instances during the lowest load hours. To rub salt into the wound, any small bug or even a super popular seller could cause connection storms on some MySQL primaries. There were simply too many applications and ProxySQL instances. Some tables were terabytes in size and could no longer be migrated. Lastly, shards were already running on high-end hardware, leaving little room to scale up.

Luckily, our Platform and SRE engineers have been preparing for such a ‘Vinted Autumn’.

The Voyage of 2019

Proof of concept

It was the beginning of 2019, and Vitess had already moved past the v3 version. Our SRE brewed a Vitess Chef cookbook and made it publicly available. With the help of the platform team, we tested a single portal with a single functional shard. After several cups of joe and code fixes, it ran without a hiccup. However, the proof of concept only tested a subset of portal features, and the load was too small to be representative.

At the end of the summer, I joined Vinted with the enthralling challenge of helping slingshot the Vitess project. ‘Vinted Autumn’ had already been foretold, so I got my hands on old-school vertical sharding early on. Shortly after, I dug into Vitess.

“We’ve made a cunning plan on how to test the main portal load on Vitess. Firstly, we’re going to collect all MySQL requests to a log cluster and then use these same requests on Vitess to see if there are any bottlenecks” - Vinted SRE

Our newly formed SRE Databases team started preparing the tooling for a Vitess performance test. In essence, we were going to collect all of the MySQL queries and replay them on a test cluster. The opportunity to test Vitess with real load would give us more confidence to move forward with the project. We also wanted to check different failure scenarios, such as server issues, primary failover and faulty migrations.
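The capture-and-replay idea can be sketched in Python, assuming a hypothetical log with one tab-separated timestamp and SQL statement per line. `replay` re-issues queries with their original spacing. A `speedup` above 1 compresses time and raises the query rate, which is one way to approximate extra growth. The `execute` callback stands in for whatever MySQL client connection points at the test cluster. All names here are illustrative, and a real load test would fan out over many concurrent connections rather than replay sequentially.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class CapturedQuery:
    ts: float  # capture time in seconds
    sql: str


def parse_capture(lines: Iterable[str]) -> List[CapturedQuery]:
    """Parse tab-separated "timestamp, SQL" lines, skipping malformed ones."""
    queries = []
    for line in lines:
        ts, sep, sql = line.rstrip("\n").partition("\t")
        if not sep or not sql:
            continue  # no SQL part on this line
        try:
            queries.append(CapturedQuery(float(ts), sql))
        except ValueError:
            continue  # unparsable timestamp
    return sorted(queries, key=lambda q: q.ts)


def replay(
    queries: List[CapturedQuery],
    execute: Callable[[str], None],
    speedup: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> None:
    """Re-issue queries keeping their original spacing, compressed by `speedup`."""
    if not queries:
        return
    start_clock = clock()
    start_ts = queries[0].ts
    for q in queries:
        due = start_clock + (q.ts - start_ts) / speedup
        delay = due - clock()
        if delay > 0:
            sleep(delay)
        execute(q.sql)
```

Injecting `sleep` and `clock` keeps the scheduling logic testable without waiting in real time.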